Top 5 Patterns and Practices of Service Testing

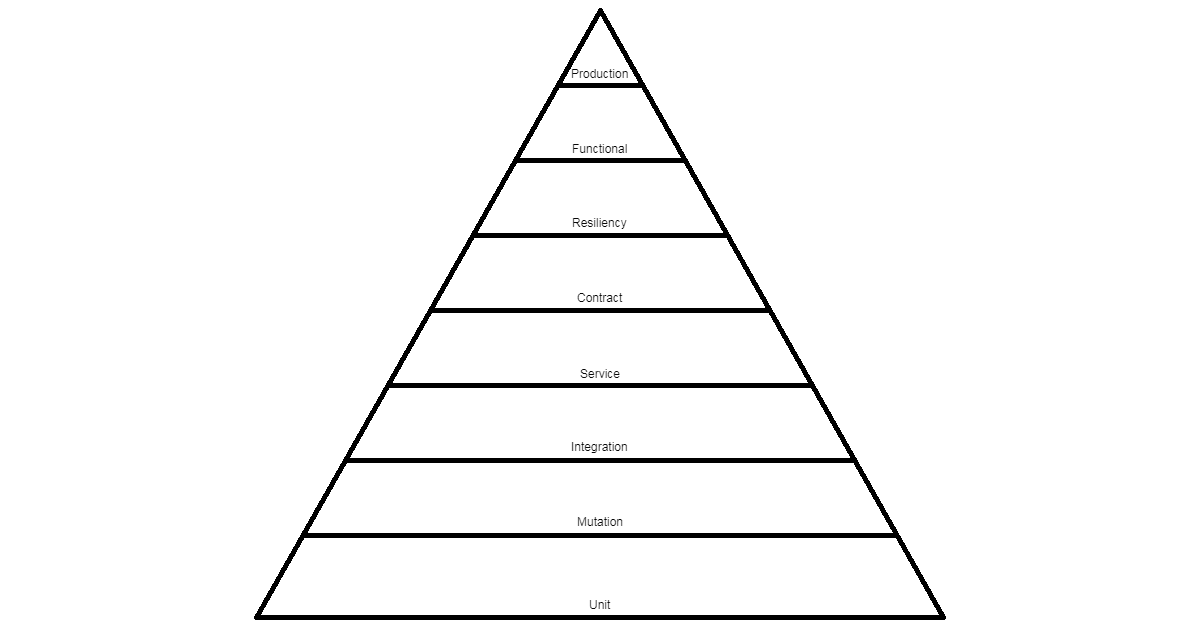

The contemporary testing pyramid includes several more strategies than early models did. While the relationship between cost, speed, and volume remains the same, today's release pipeline does a heck of a lot more than your old build script did, across many more stages. One of those stages is Service Testing, and its importance has grown with the adoption of containers.

What is Service Testing? It is automated testing that verifies a service functions as expected and meets its business requirements within the context of the overall architecture. For example, service testing an Order Service includes sending a checkoutOrder command to the OrdersController, which in turn accesses the Orders database and logs to stdout. To pull this off in an automated pipeline, each of the service's components needs to be stood up in a production-like manner.

I have seen Service Testing fail for many reasons, but it doesn't have to go that way. There are patterns and practices we can use to produce highly effective Service Testing. And we want good Service Testing, because it verifies that our system does what it is meant to do using the same tools, engines, libraries, images, etc. that it will use in production.

Here are the top 5 Service Testing patterns and practices I promote:

Use Your Testing Framework

Write your Service Tests as you would write your Unit Tests and your Integration Tests, using the very same testing framework. For example, if you write Unit Tests in xUnit, JUnit, Jest, PyTest, etc., then use that framework for your Service Tests too. There are many reasons to do so, including familiar semantics (i.e., less context switching), consistent pipeline configuration and, most of all, more readable code.
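As a sketch of what sharing one framework looks like, the Python module below uses the standard library's xUnit-style unittest for both a Unit Test and a Service Test. The order_total function, the /orders/{id} route, and the ORDER_SERVICE_URL variable are hypothetical. The Service Test skips itself when that variable is unset, so a single `python -m unittest` command covers both kinds of test locally and in the pipeline.

```python
import os
import unittest
import urllib.error
import urllib.request


def order_total(prices: list[float], discount: float = 0.0) -> float:
    """Hypothetical domain logic, covered by an ordinary Unit Test."""
    return round(sum(prices) * (1 - discount), 2)


class OrderTotalUnitTests(unittest.TestCase):
    # Unit Test: in-process logic only, no containers needed.
    def test_applies_discount(self):
        self.assertEqual(order_total([10.0, 20.0], discount=0.1), 27.0)


class OrderServiceTests(unittest.TestCase):
    # Service Test: same framework and assertions, but it talks to the running service.
    def setUp(self):
        # Hypothetical variable the pipeline sets once the containers are up.
        self.base_url = os.environ.get("ORDER_SERVICE_URL")
        if not self.base_url:
            self.skipTest("ORDER_SERVICE_URL is not set; service stack not running")

    def test_unknown_order_returns_404(self):
        # The /orders/{id} route is an assumption about the service's API.
        with self.assertRaises(urllib.error.HTTPError) as ctx:
            urllib.request.urlopen(f"{self.base_url}/orders/does-not-exist", timeout=5)
        self.assertEqual(ctx.exception.code, 404)
```

Both classes share the same assertions, runner, and reporting, so a reader moving from one to the other has nothing new to learn.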

Use Your Containers

Stand up your services using a container configuration similar to the one they will use in production. This means, for example, that your Service Tests run against a real database rather than an in-memory provider.

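A typical Service Test pipeline has three steps, and they are the same whether you write them as a GitHub Actions workflow or for Jenkins, GitLab, Travis, etc.:

- docker-compose up: stand up the service and its dependencies.

- docker-compose run: run the Service Tests against them.

- docker-compose down: tear everything down.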
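The docker-compose up, run, and down steps can also be wrapped in a small script so that teardown happens even when the tests fail. The Python sketch below assumes a docker-compose.yml that defines the stack (the service names order-service and orders-db are hypothetical) plus a one-off service named tests, also hypothetical, that runs the Service Tests. The run parameter defaults to subprocess.run and exists only so the logic can be exercised without Docker.

```python
import subprocess
from typing import Callable

COMPOSE = ("docker-compose",)           # newer Docker installs also accept ("docker", "compose")
STACK = ("order-service", "orders-db")  # hypothetical service names from docker-compose.yml
TEST_SERVICE = "tests"                  # hypothetical one-off service that runs the Service Tests


def run_service_tests(
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> int:
    """docker-compose up, run, down; returns the exit code of the first failing step or the tests."""
    try:
        # Start only the stack, so the test container is not also started in the background.
        up = run([*COMPOSE, "up", "-d", *STACK])
        if up.returncode != 0:
            return up.returncode
        # One-off test container, removed when it exits.
        return run([*COMPOSE, "run", "--rm", TEST_SERVICE]).returncode
    finally:
        # Always tear down, even if startup or the tests failed.
        run([*COMPOSE, "down"])
```

A pipeline step could then call sys.exit(run_service_tests()). Note that up -d returns once the containers have started, not when they are ready to accept requests, so a health check or a retry in the tests may still be needed.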

Use Your Epics and Stories

Align your Service Tests with your epics and stories. This means organizing your tests by feature, naming them after your stories, and taking the bodies of your Service Tests directly from your acceptance criteria. Basing your Service Tests firmly on what the system is meant to do (your epics and stories) leads to a system that is well tested at the service level.

Push Often, Test Just as Often

Run your Service Tests just as often as you run your Unit Tests, which should be with every push. The goal is a system that is always ready to deploy. You may hear that Service Tests and Integration Tests, heck, anything other than Unit Tests, should run only on pull requests. With all due respect, that's nonsense. Let's face it, there are only two reasons we delay running tests: 1) so that we can push WIP code; and 2) because tests take too long to run and/or strain resources.

There are several things we can do to support running tests with every push:

- Run the pipeline test stages locally before you push. Take this a step further and use a commit-test-push script that aborts the push if tests fail.

- Treat a failed branch build as an opportunity to improve the system, rather than as something to be avoided. The red X is good, not bad.

- Push often. Each push then carries fewer changes, which means fewer possible causes for any failure and fewer failures overall.
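A commit-test-push script can be as small as the Python sketch below. The test stage commands and directories are placeholders for whatever your pipeline's test stages run; the run parameter defaults to subprocess.run so the flow can be checked without touching git.

```python
import subprocess
import sys
from typing import Callable, Sequence

# Hypothetical stage commands: mirror the test stages your pipeline runs.
TEST_STAGES: tuple[list[str], ...] = (
    ["python", "-m", "pytest", "tests/unit"],
    ["python", "-m", "pytest", "tests/service"],
)


def commit_test_push(
    message: str,
    stages: Sequence[list[str]] = TEST_STAGES,
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> int:
    """Commit, run every test stage locally, and push only if all stages pass."""
    commit = run(["git", "commit", "-am", message])
    if commit.returncode != 0:
        return commit.returncode
    for stage in stages:
        result = run(list(stage))
        if result.returncode != 0:
            # Abort before pushing: the failure stays local and is cheap to fix.
            print(f"Tests failed ({' '.join(stage)}); push aborted.", file=sys.stderr)
            return result.returncode
    return run(["git", "push"]).returncode
```

Git's pre-push hook is an alternative home for the same check: git runs the hook before pushing and aborts the push if it exits with a non-zero status.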

Aim for Full Coverage

I advocate 100% behavior coverage via a combination of Unit Tests and Mutation Tests. Even if you do not reach full coverage, the more coverage you have, the more your Service Tests benefit. This seems logical, right? The higher the percentage of tested component behavior, the more likely those components are to integrate as required and expected.

It is quite common for my Service Tests to "just work" - because I commit to full coverage along the way. By the time I get to Service Tests, there is very little left untested - usually in the realm of external behaviors (e.g., native implementation of a database provider).
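Mutation testing helps show whether tests cover behavior rather than just lines, and the idea fits in a few lines of code. The Python sketch below hand-rolls it for a hypothetical free-shipping rule: each mutant is a small deliberate bug, and a test suite kills a mutant if it fails against it. Dedicated tools such as PIT (Java), Stryker (JavaScript, C#) or mutmut (Python) generate and run mutants automatically; this sketch only illustrates the principle.

```python
from typing import Callable

Predicate = Callable[[float], bool]


def is_free_shipping(total: float) -> bool:
    """Hypothetical rule under test: orders of 50 or more ship free."""
    return total >= 50


# Each mutant is a small deliberate bug a mutation tool might generate.
MUTANTS: dict[str, Predicate] = {
    ">= replaced by >": lambda total: total > 50,
    ">= replaced by <=": lambda total: total <= 50,
    "always True": lambda total: True,
}


def weak_suite(f: Predicate) -> bool:
    # Full line coverage, but never probes the boundary.
    return f(100) is True and f(10) is False


def strong_suite(f: Predicate) -> bool:
    # Adds the boundary cases the rule actually cares about.
    return weak_suite(f) and f(50) is True and f(49.99) is False


def surviving_mutants(suite: Callable[[Predicate], bool]) -> list[str]:
    """A mutant survives if the suite still passes against it."""
    assert suite(is_free_shipping), "suite must pass on the real code first"
    return [name for name, mutant in MUTANTS.items() if suite(mutant)]


print(surviving_mutants(weak_suite))    # ['>= replaced by >']
print(surviving_mutants(strong_suite))  # []
```

Both suites execute every line of is_free_shipping, yet only the stronger one pins down the boundary at 50.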

Conclusion

My guess is that you already do some or all of the above. Kudos if you do! In that case, I hope this post motivates you to keep raising the bar.

If some or all of the above is new to you, I hope this post shows you how easy and beneficial it is to write Service Tests. To summarize what we've covered:

- Write Service Tests in your normal testing framework.

- Run Service Tests against production-like containers.

- Derive Service Tests directly from stories and epics.

- Run Service Tests locally and with every push, and push often.

- Support Service Tests with full behavior coverage from Unit Tests and Mutation Tests.

If you have any questions or thoughts you wish to share, hit me up wherever you are social @RjaeEaston.